Interview Expert

April 13, 2013

We did an interview with an employee at NXP. These are the questions we asked and the answers we got:

1. What is the importance of pitch detection in speech analysis:

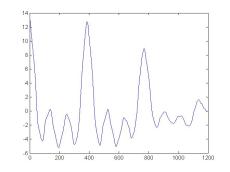

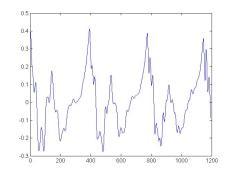

Speech analysis is very useful for noise suppression, especially if the background noise is non-stationary and you want to separate the human voice from it. By detecting the pitch, you can extract the human voice from the noisy signal. It can also be used for speaker identification, speaker detection, speaker verification, or genre/music classification.
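Pitch detection itself can be done in many ways. As a minimal sketch, the Python function below estimates the fundamental frequency of a short frame with the classic autocorrelation method. This is one common technique, not necessarily the one used at NXP, and the function name, the 60 to 400 Hz search range and the voicing threshold of 0.3 are illustrative assumptions.

```python
import math


def estimate_pitch(frame, sample_rate, f_min=60.0, f_max=400.0, voicing_threshold=0.3):
    """Estimate the fundamental frequency (Hz) of a frame, or return None if unvoiced.

    Autocorrelation method: the lag with the strongest self-similarity inside
    the assumed pitch range is taken as the pitch period.
    """
    if len(frame) < 2:
        return None
    min_lag = int(sample_rate / f_max)
    max_lag = min(int(sample_rate / f_min), len(frame) - 1)

    # Remove the DC offset so it does not dominate the correlation.
    mean = sum(frame) / len(frame)
    x = [s - mean for s in frame]
    energy = sum(s * s for s in x)
    if energy == 0.0:
        return None  # silence

    best_lag, best_r = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Autocorrelation at this lag, normalized by the frame energy.
        r = sum(x[i] * x[i + lag] for i in range(len(x) - lag)) / energy
        if r > best_r:
            best_lag, best_r = lag, r

    # A weak best peak means no clear periodicity, i.e. probably not voiced speech.
    if best_lag is None or best_r < voicing_threshold:
        return None
    return sample_rate / best_lag


fs = 8000
frame = [math.sin(2 * math.pi * 200 * n / fs) for n in range(400)]  # 50 ms of a 200 Hz tone
print(estimate_pitch(frame, fs))  # 200.0
```

Real speech needs more care (octave errors, noise, frame-to-frame smoothing), which is why practical pitch trackers add post-processing on top of a core like this.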

2. How can speech analysis be used to help people:

Speech analysis can be used to filter out background noise, which can solve a lot of problems for people who use hearing aids. Pitch-shifting sounds from a frequency range the listener can barely hear into a higher or lower range can also help people with hearing impairment.
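The simplest form of pitch shifting is resampling: reading the signal faster or slower scales every frequency by the same ratio. The sketch below (plain Python, linear interpolation, no anti-aliasing filter, hypothetical function name) only illustrates the idea. It also changes the duration, and practical frequency lowering in hearing aids is typically more selective than shifting the whole spectrum.

```python
import math


def resample_pitch_shift(x, ratio):
    """Scale every frequency in x by `ratio` using linear-interpolation resampling.

    ratio > 1 raises the pitch, ratio < 1 lowers it. The output has
    len(x) / ratio samples, so the duration changes by 1 / ratio as well.
    No anti-aliasing filter is applied, so raising the pitch can alias.
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    out_len = int(len(x) / ratio)
    y = []
    for n in range(out_len):
        pos = n * ratio                       # read position in the input
        i = min(int(pos), len(x) - 1)
        frac = pos - i
        nxt = x[i + 1] if i + 1 < len(x) else x[i]
        y.append((1.0 - frac) * x[i] + frac * nxt)
    return y


fs = 8000
tone = [math.sin(2 * math.pi * 400 * n / fs + 0.3) for n in range(fs)]  # 1 s at 400 Hz
lowered = resample_pitch_shift(tone, 0.5)  # now 200 Hz, but 2 s long
print(len(lowered))  # 16000
```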

3. Where is speech analysis currently being used:

Speech analysis is used, for example, to improve the intelligibility of certain signals. To make a speaker easier to understand, you can process the sound to selectively slow down the parts that need to become more intelligible and speed up the other, less significant parts, so that in the end the speech keeps its original length. This is quite difficult to do, and pitch detection and pitch shifting are key elements of such a technology.
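Keeping the original length is simple arithmetic. If segments with total duration D out of a total T are slowed down by a factor s > 1, the remaining material must be time-scaled by r = (T - s*D) / (T - D), so that s*D + r*(T - D) = T. A small sketch follows; the segment durations and the choice of which segments count as important are hypothetical inputs, and choosing them is the hard part the answer refers to.

```python
def compensating_rate(durations, important, slow_factor):
    """Time-scale factor for the unimportant segments that keeps the total length.

    durations   -- segment lengths in seconds (hypothetical segmentation)
    important   -- indices of segments to slow down
    slow_factor -- stretch applied to important segments (> 1 means slower)
    Returns r such that slow_factor * D + r * (T - D) == T.
    """
    total = sum(durations)
    d_imp = sum(d for i, d in enumerate(durations) if i in important)
    rest = total - d_imp
    if rest <= 0:
        raise ValueError("nothing left to speed up")
    new_rest = total - slow_factor * d_imp
    if new_rest <= 0:
        raise ValueError("slow_factor too large to preserve the original length")
    return new_rest / rest


segments = [0.5, 1.0, 0.5]  # e.g. filler, key phrase, filler
r = compensating_rate(segments, {1}, 1.3)
print(round(r, 3))  # 0.7: the other segments play at about 1.43x speed
```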

It can also help people with hearing impairment in a noisy environment. The speech can be slowed down so that it is spread over more of a noise that is not stationary. There will then be moments in the noise where the speech can come through, and by extending the speech in time you get more opportunities for it to come through.

It can also be used in mobile phones for voicemail. When someone leaves their telephone number and speaks too fast, you may have trouble understanding the message. If you slow down the message, it becomes easier to understand.
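Slowing a message down without making the voice sound lower is a time-stretching problem. As a rough sketch under simple assumptions, plain overlap-add (OLA) with a Hann window reads frames at a smaller hop than it writes them. Plain OLA introduces audible artifacts on voiced speech; variants such as WSOLA (which aligns frames by waveform similarity) or PSOLA (which places frames at pitch marks, so it relies on pitch detection) reduce them. The function name, frame length and hop size are hypothetical defaults.

```python
import math


def ola_time_stretch(x, factor, frame_len=256, hop_out=64):
    """Time-stretch x by `factor` (> 1 slows down) with plain windowed overlap-add.

    Frames are read every hop_out / factor samples and written every hop_out
    samples, so the duration scales by roughly `factor` while the waveform
    inside each frame (and hence the pitch) is kept.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    if len(x) < frame_len:
        raise ValueError("input shorter than one frame")
    hop_in = hop_out / factor
    # Periodic Hann window.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len) for n in range(frame_len)]
    n_frames = int((len(x) - frame_len) / hop_in) + 1
    out_len = (n_frames - 1) * hop_out + frame_len
    y = [0.0] * out_len
    weight = [0.0] * out_len
    for k in range(n_frames):
        start_in = int(round(k * hop_in))
        start_out = k * hop_out
        for n in range(frame_len):
            y[start_out + n] += window[n] * x[start_in + n]
            weight[start_out + n] += window[n]
    # Normalize by the summed window so overlapping frames do not change the level.
    return [v / w if w > 1e-8 else 0.0 for v, w in zip(y, weight)]


fs = 8000
stand_in = [math.sin(2 * math.pi * 200 * n / fs) for n in range(fs)]  # 1 s test tone
slower = ola_time_stretch(stand_in, 1.5)
print(len(slower) / fs)  # roughly 1.5 seconds
```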

4. How do you test real-time software:

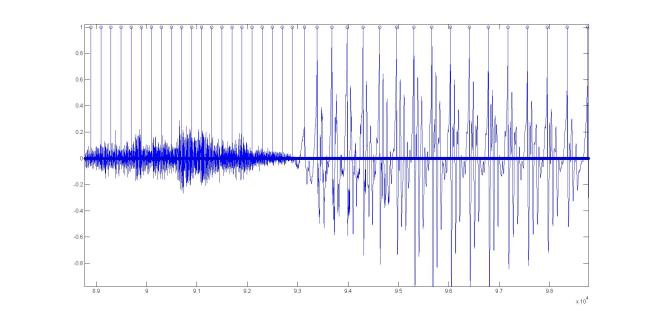

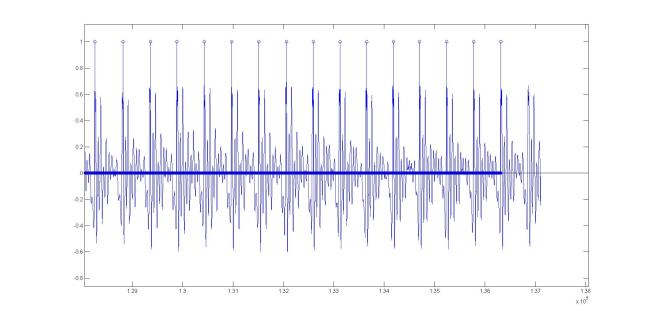

Real-time software has to run on a specific processor (most of the time a DSP or DSP-like core). First of all you need an implementation, and because most processors come with a simulator environment, you can simulate the behavior of the core on your PC. This means you can process test files on the PC to obtain output files, which can then be compared to a reference.

You can think of two types of tests. The first is a functional test, where you check whether the output behaves according to the requirements of the function being tested. The second is called ‘bit-true testing’. Here you first have a reference algorithm that has been evaluated via listening tests to make sure it behaves well, so you can then compare the outputs of your real-time implementation and your reference algorithm bit by bit. For this comparison, a range is also defined within which the outputs may differ, because they will never be exactly the same. This second way of testing is faster and easier to implement, but unfortunately it is also less robust: you might still flag small differences that are not real issues at all, or miss small issues that can cause problems in the end.
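A bit-true comparison with a tolerance can be sketched as follows, assuming both the reference and the real-time implementation produce 16-bit Q15 samples. Here the "reference" is a floating-point gain quantized afterwards and the "implementation" is a fixed-point multiply with rounding; the function names and the 1-LSB tolerance are hypothetical choices for illustration.

```python
import math


def to_q15(value):
    """Quantize a float in [-1, 1) to a saturated 16-bit Q15 integer."""
    return max(-32768, min(32767, int(round(value * 32768))))


def q15_gain(samples, gain):
    """Fixed-point 'implementation': Q15 x Q15 multiply with rounding, back to Q15."""
    return [max(-32768, min(32767, (s * gain + (1 << 14)) >> 15)) for s in samples]


def compare_within_tolerance(reference, output, tolerance_lsb=1):
    """Return (index, reference, output) for every sample differing by more than tolerance_lsb."""
    if len(reference) != len(output):
        raise ValueError("outputs have different lengths")
    return [(i, r, o) for i, (r, o) in enumerate(zip(reference, output))
            if abs(r - o) > tolerance_lsb]


signal = [0.9 * math.sin(2 * math.pi * n / 50) for n in range(200)]
reference = [to_q15(0.5 * s) for s in signal]                # float algorithm, quantized at the end
implementation = q15_gain([to_q15(s) for s in signal], to_q15(0.5))
print(compare_within_tolerance(reference, implementation))  # [] means all within 1 LSB
```

Reporting the index and both values, rather than a single pass/fail, makes it easier to decide whether a flagged difference is a real issue or just rounding.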

5. What is more important: quality, or computational load, memory and speed:

Both. Quality requires your algorithm to perform up to a certain level, while speed requires the job to be done with very little computational load. When you only have a tiny DSP processor available, where the computational load is limited, the main focus will be on getting the algorithm to fit onto the processor rather than on quality. So when resources are limited, speed tends to be the main focus, but in general both are always important. Even when processors have massive MIPS (millions of instructions per second) available, there will also be a massive amount of work to do, which requires optimized algorithms that run very efficiently and still deliver very high audio performance.
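A first check of whether an algorithm fits on a given core is back-of-the-envelope arithmetic: cycles needed per frame times frames per second. The sketch below uses hypothetical numbers and function names, and treats one cycle as one instruction, which is a simplification.

```python
def required_mips(cycles_per_frame, frame_len, sample_rate):
    """Millions of cycles per second needed to process every frame in real time."""
    frames_per_second = sample_rate / frame_len
    return cycles_per_frame * frames_per_second / 1e6


def fits_budget(cycles_per_frame, frame_len, sample_rate, budget_mips, headroom=0.0):
    """True if the load fits the core's budget while keeping a fraction `headroom` free."""
    return required_mips(cycles_per_frame, frame_len, sample_rate) <= budget_mips * (1.0 - headroom)


# Hypothetical numbers: 400,000 cycles per 10 ms frame (160 samples at 16 kHz).
load = required_mips(400_000, 160, 16_000)
print(load)                                                 # 40.0
print(fits_budget(400_000, 160, 16_000, 60, headroom=0.2))  # True (48 MIPS usable)
print(fits_budget(400_000, 160, 16_000, 45, headroom=0.2))  # False (36 MIPS usable)
```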

6. Which sector has the most demand for this type of audio processing (voice processing):

Some sectors that demand voice processing are the communication sector, the medical sector and the music industry.

Speech recognition is also becoming more important in the medical sector, because in operating rooms people increasingly make recordings that are automatically transcribed into reports. These reports can then show all the events that took place during the operation. For this reason there is also a need for speaker identification, to detect who said what and when.

In the music industry, DJs can also use it for beat matching or pitch shifting.
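The arithmetic behind beat matching is a tempo ratio. If the tempo is changed by simply playing the track faster or slower, the pitch moves by 12 × log2(ratio) semitones; time-stretching (often called key lock in DJ tools) avoids that. The BPM values and the function name below are only an example.

```python
import math


def beatmatch(source_bpm, target_bpm):
    """Return (speed ratio, resulting pitch change in semitones) for a plain speed change."""
    ratio = target_bpm / source_bpm
    semitones = 12 * math.log2(ratio)
    return ratio, semitones


ratio, semitones = beatmatch(120, 126)
print(f"play at {ratio:.2f}x, pitch moves {semitones:+.2f} semitones")
# play at 1.05x, pitch moves +0.84 semitones
```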
